Markov chain model

https://colah.github.io/posts/2015-08-Understanding-LSTMs/

https://www.tensorflow.org/tutorials/sequences/recurrent

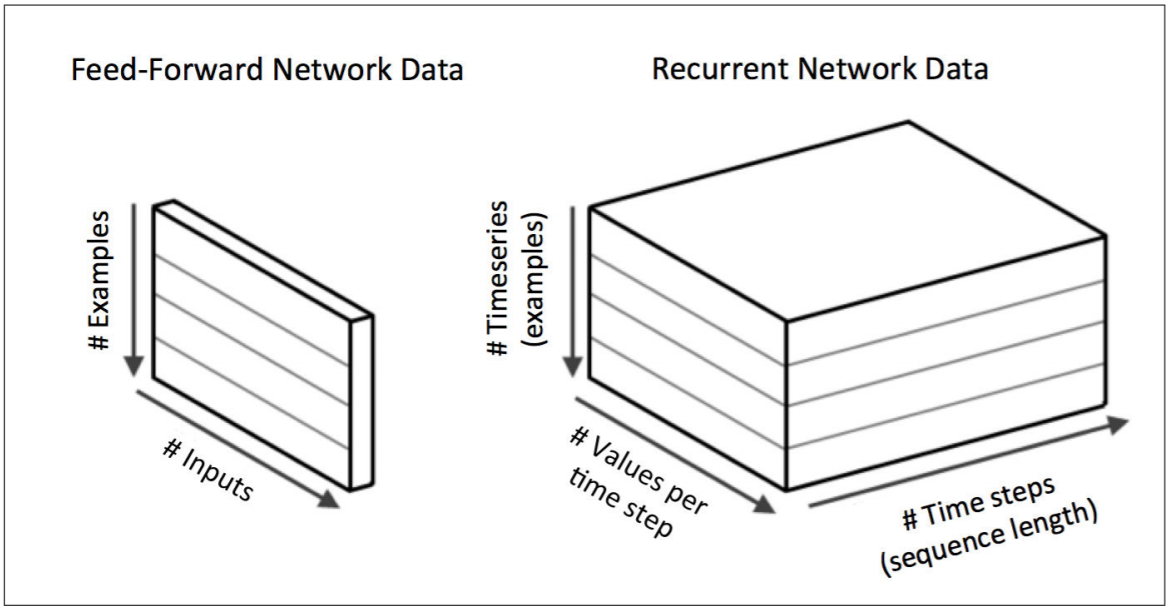

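When working with neural networks, it is important to handle the data dimensions correctly. Recurrent Neural Networks usually take 3D (volumetric) input with these three dimensions: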

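- Mini-batch size: how many samples are in one batch

- Number of columns in our vector per time-step: how many features the input vector has at each step

- Number of time-steps: how many steps each sequence contains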
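A minimal NumPy sketch of building that 3D input from one multivariate series by cutting it into overlapping windows. It assumes the batch-major layout (batch, time-steps, features) used by Keras-style RNN layers; PyTorch's RNN layers default to (time-steps, batch, features) unless `batch_first=True`. The helper `make_windows` and the toy data are hypothetical.

```python
import numpy as np

def make_windows(series: np.ndarray, time_steps: int) -> np.ndarray:
    """Cut a (total_steps, features) array into overlapping windows of shape
    (num_windows, time_steps, features)."""
    total_steps = series.shape[0]
    num_windows = total_steps - time_steps + 1
    return np.stack([series[i:i + time_steps] for i in range(num_windows)])

# Toy data: 100 time-steps, each a vector with 3 columns (features).
series = np.arange(300, dtype=np.float32).reshape(100, 3)
x = make_windows(series, time_steps=10)
print(x.shape)            # (91, 10, 3)

batch_size = 32
first_batch = x[:batch_size]
print(first_batch.shape)  # (32, 10, 3) -> (mini-batch size, time-steps, columns per step)
```

Each window starts one step after the previous one, so 100 steps with a window of 10 give 100 - 10 + 1 = 91 samples.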

LSTMs

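Vanishing gradient problem: in a plain RNN, gradients backpropagated through many time-steps tend to shrink toward zero, which makes long-range dependencies hard to learn. LSTMs were designed to mitigate this.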
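A small numerical sketch of why the gradient vanishes, under simplifying assumptions: a scalar RNN with a fixed weight `w` and random inputs (the function names are made up for illustration). Backpropagating through h_t = tanh(w h_{t-1} + x_t) multiplies in one factor w(1 - h_t²) per step, and each factor has magnitude at most |w|. For comparison, the LSTM cell-state path c_t = f_t c_{t-1} + i_t g_t contributes a factor f_t per step; here f_t is held constant, which a real, learned forget gate is not.

```python
import numpy as np

def rnn_gradient(w: float, steps: int, seed: int = 0) -> float:
    """Magnitude of d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    rng = np.random.default_rng(seed)
    h, grad = 0.0, 1.0
    for _ in range(steps):
        x = rng.normal()             # random input at this time-step
        h = np.tanh(w * h + x)
        grad *= w * (1.0 - h ** 2)   # d h_t / d h_{t-1} = w * tanh'(.)
    return abs(grad)

def lstm_cell_path_gradient(forget_gate: float, steps: int) -> float:
    """d c_T / d c_0 along the cell state c_t = f * c_{t-1} + i * g,
    assuming a constant forget gate and ignoring paths through h_{t-1}."""
    return forget_gate ** steps

for T in (10, 50, 100):
    print(T, rnn_gradient(0.9, T), lstm_cell_path_gradient(0.99, T))
```

With |w| < 1 the RNN gradient shrinks at least as fast as |w|^T, while a forget gate near 1 lets the cell-state gradient decay far more slowly (0.99^50 ≈ 0.605).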

Gated recurrent units (GRUs) are a gating mechanism in recurrent neural networks, introduced in 2014 by Kyunghyun Cho et al. Their performance on polyphonic music modeling and speech signal modeling was found to be similar to that of long short-term memory (LSTM). GRUs have also been reported to perform better on certain smaller datasets. They have fewer parameters than an LSTM because they lack an output gate.
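A NumPy sketch of one GRU step, plus a parameter count that makes the "fewer parameters" point concrete. It follows one common formulation (reset gate applied before the recurrent matrix, h_t = (1 - z) h_{t-1} + z h̃_t); Cho et al. and some libraries swap the roles of z and 1 - z, and libraries such as PyTorch keep separate input and recurrent biases, so their exact counts differ. All function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(input_size, hidden_size, seed=0):
    """Hypothetical initializer: one (W, U, b) block each for the update gate z,
    the reset gate r and the candidate state h."""
    rng = np.random.default_rng(seed)
    params = {}
    for name in ("z", "r", "h"):
        params["W_" + name] = rng.normal(0.0, 0.1, (hidden_size, input_size))
        params["U_" + name] = rng.normal(0.0, 0.1, (hidden_size, hidden_size))
        params["b_" + name] = np.zeros(hidden_size)
    return params

def gru_step(p, x, h_prev):
    """One GRU time-step: no separate cell state and no output gate."""
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h_prev + p["b_z"])   # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h_prev + p["b_r"])   # reset gate
    h_tilde = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h_prev) + p["b_h"])
    return (1.0 - z) * h_prev + z * h_tilde   # interpolate old and candidate state

def count_params(input_size, hidden_size, num_blocks):
    """Parameters per layer when each block has W (hidden x input), U (hidden x hidden), b."""
    return num_blocks * (hidden_size * (input_size + hidden_size) + hidden_size)

params = init_gru(input_size=8, hidden_size=16)
h = np.zeros(16)
for x in np.random.default_rng(1).normal(size=(5, 8)):   # 5 time-steps, 8 features each
    h = gru_step(params, x, h)
print(h.shape)                                            # (16,)
print(count_params(8, 16, 3), count_params(8, 16, 4))     # 1200 1600
```

A GRU has three such blocks (update, reset, candidate) where an LSTM has four (input, forget, output, candidate), so with one bias per block a GRU layer has 3/4 as many parameters as an LSTM layer of the same size.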

RMSprop optimizer

https://www.quora.com/Why-is-it-said-that-RMSprop-optimizer-is-recommended-in-training-recurrent-neural-networks-What-is-the-explanation-behind-it

http://ruder.io/optimizing-gradient-descent/
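A minimal sketch of the RMSprop update as described in Ruder's overview linked above: keep a running average of squared gradients for each parameter and divide each step by its square root. The decay rate 0.9 (γ in Ruder's notation, `rho` here) and learning rate 0.001 are the defaults suggested there; ε is added outside the square root in this sketch, whereas Ruder writes it inside (libraries differ). The quadratic test function is made up for illustration.

```python
import numpy as np

def rmsprop(grad_fn, theta0, lr=0.001, rho=0.9, eps=1e-8, steps=1000):
    """Minimize a function, given its gradient, with the RMSprop update rule."""
    theta = np.array(theta0, dtype=float)
    sq_avg = np.zeros_like(theta)               # running average E[g^2], per parameter
    for _ in range(steps):
        g = grad_fn(theta)
        sq_avg = rho * sq_avg + (1.0 - rho) * g ** 2
        theta -= lr * g / (np.sqrt(sq_avg) + eps)   # per-parameter scaled step
    return theta

# Ill-conditioned quadratic f(x, y) = 0.5 * (100 x^2 + y^2):
# the x-gradient is 100 times larger than the y-gradient at the same distance.
def grad_f(t):
    return np.array([100.0 * t[0], t[1]])

theta = rmsprop(grad_f, [1.0, 1.0], lr=0.01, steps=2000)
print(theta)  # both coordinates end up near 0
```

Because each coordinate's step is divided by that coordinate's own RMS gradient, the x-direction does not take 100 times larger steps than the y-direction. This per-parameter normalization is one intuition offered for why RMSprop copes with gradients whose scale varies a lot, as in RNN training, though it is not a complete explanation.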

Hyperparameters

How should hidden_layer_size be chosen?
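One generic approach (an assumption here, not something taken from the linked sources) is to treat hidden_layer_size like any other hyperparameter: train with a few candidate sizes and keep the one with the lowest validation loss. In the sketch below, `validation_loss` is a hypothetical callback standing in for "train a model with this size and evaluate it"; `toy_validation_loss` is invented only so the example runs.

```python
from typing import Callable, Iterable, Tuple

def select_hidden_size(candidates: Iterable[int],
                       validation_loss: Callable[[int], float]) -> Tuple[int, float]:
    """Return the candidate hidden size with the lowest validation loss."""
    best_size, best_loss = -1, float("inf")
    for size in candidates:
        loss = validation_loss(size)   # hypothetical: train + evaluate a model of this size
        if loss < best_loss:           # strict '<' keeps the earlier candidate on ties
            best_size, best_loss = size, loss
    return best_size, best_loss

def toy_validation_loss(hidden_size: int) -> float:
    """Made-up U-shaped curve: too small underfits, too large overfits."""
    return (hidden_size - 100) ** 2 / 1e4 + 0.1

best_size, best_loss = select_hidden_size([16, 32, 64, 128, 256], toy_validation_loss)
print(best_size)  # 128
```

The recurrent weight count grows roughly with the square of the hidden size, so larger candidates also cost noticeably more to train.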

https://distill.pub/2016/augmented-rnns/
