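The previous post, TensorFlow - Convolutional Neural Networks, introduced what a convolutional neural network is. In this post we use TensorFlow's convolutional neural network tools to build a handwritten digit recognition model on [MNIST][wiki/MNIST] as an exercise.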

https://www.tensorflow.org/versions/r1.0/get_started/mnist/pros

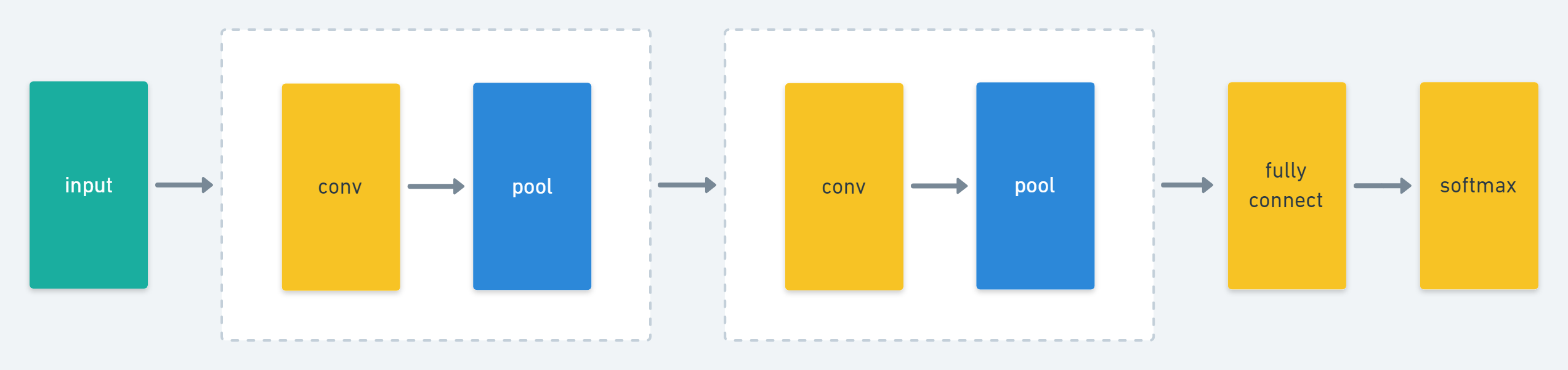

The network consists of two convolutional layers interleaved with two max-pooling layers, followed by a fully connected layer (with dropout, p = 0.5) and a final softmax layer.
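To make the architecture concrete, the sketch below traces the tensor shape through each layer. It assumes the layer sizes used in this post's code (5x5 convolutions with 32 and 64 filters, stride 1, `'SAME'` padding, and 2x2 max pooling with stride 2); the helper names `same_out` and `trace_shapes` are made up for illustration.

```python
import math


def same_out(size, stride=1):
    # With 'SAME' padding the spatial output size is ceil(input / stride).
    return math.ceil(size / stride)


def trace_shapes(height=28, width=28):
    """Return (layer name, output shape) pairs for the network in this post."""
    shapes = [("input", (height, width, 1))]
    h, w, channels = height, width, 1
    for index, channels in ((1, 32), (2, 64)):
        # 5x5 convolution, stride 1: spatial size is unchanged.
        h, w = same_out(h), same_out(w)
        shapes.append(("conv_%d" % index, (h, w, channels)))
        # 2x2 max pooling, stride 2: spatial size is halved.
        h, w = same_out(h, 2), same_out(w, 2)
        shapes.append(("pool_%d" % index, (h, w, channels)))
    shapes.append(("flatten", (h * w * channels,)))
    shapes.append(("fc", (1024,)))
    shapes.append(("output", (10,)))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

The flattened size after the second pooling layer is where the `7 * 7 * 64` in the fully connected layer comes from.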

MNIST Dataset

Complete code

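    # Written against the TensorFlow 1.x API (sessions, placeholders, tf.examples).
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    DATA_DIR = '/tmp/mnist-data'

    # Download the data set if needed and load it with one-hot encoded labels.
    mnist = input_data.read_data_sets(DATA_DIR, one_hot=True)

    sess = tf.InteractiveSession()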

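    def weight_variable(shape):
        # Small random weights break the symmetry between units.
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)

    def bias_variable(shape):
        # A slightly positive bias keeps ReLU units active at the start.
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)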

    # generates a convolutional layer with a particular shape
    def conv2d(input, weight_shape, bias_shape):
        # fan-in = filter height * filter width * input channels
        ins = weight_shape[0] * weight_shape[1] * weight_shape[2]
        # scale the initial weights by sqrt(2 / fan-in)
        weight_init = tf.random_normal_initializer(stddev=(2.0/ins)**0.5)
        W = tf.get_variable("W", weight_shape, initializer=weight_init)
        bias_init = tf.constant_initializer(value=0)
        b = tf.get_variable("b", bias_shape, initializer=bias_init)
        # set the stride to be 1
        conv_out = tf.nn.conv2d(input, W, strides=[1, 1, 1, 1], padding='SAME')
        return tf.nn.relu(tf.nn.bias_add(conv_out, b))

    # generates a max pooling layer with non-overlapping windows of size k
    def max_pool(input, k=2):
        return tf.nn.max_pool(input, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding='SAME')

    # generates a fully connected layer followed by a ReLU activation
    def layer(inputs, weight_shape, bias_shape):
        W = weight_variable(weight_shape)
        b = bias_variable(bias_shape)
        return tf.nn.relu(tf.matmul(inputs, W) + b)

    def inference(x, keep_prob):
        # reshape the flat 784-pixel vectors into 28x28 single-channel images
        x = tf.reshape(x, shape=[-1, 28, 28, 1])

        with tf.variable_scope("conv_1"):
            conv_1 = conv2d(x, [5, 5, 1, 32], [32])
            pool_1 = max_pool(conv_1)

        with tf.variable_scope("conv_2"):
            conv_2 = conv2d(pool_1, [5, 5, 32, 64], [64])
            pool_2 = max_pool(conv_2)

        with tf.variable_scope("fc"):
            pool_2_flat = tf.reshape(pool_2, [-1, 7 * 7 * 64])
            fc_1 = layer(pool_2_flat, [7*7*64, 1024], [1024])
            # apply dropout
            fc_1_drop = tf.nn.dropout(fc_1, keep_prob)

        with tf.variable_scope("output"):
            # Return raw logits here, without ReLU: the loss function
            # softmax_cross_entropy_with_logits applies softmax itself.
            W_out = weight_variable([1024, 10])
            b_out = bias_variable([10])
            output = tf.matmul(fc_1_drop, W_out) + b_out

        return output

    # Train and Evaluate the Model
    x = tf.placeholder(tf.float32, shape=[None, 784])
    y_ = tf.placeholder(tf.float32, shape=[None, 10])
    keep_prob = tf.placeholder(tf.float32)

    y_conv = inference(x, keep_prob)

    cross_entropy = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y_conv))
    train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

    correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    sess.run(tf.global_variables_initializer())

    for i in range(10000):
        batch = mnist.train.next_batch(50)
        if i % 100 == 0:
            # report accuracy on the current batch with dropout disabled
            train_accuracy = accuracy.eval(feed_dict={
                x: batch[0], y_: batch[1], keep_prob: 1.0})
            print("step %d, training accuracy %g" % (i, train_accuracy))
        # train with dropout enabled (keep half of the units)
        train_step.run(feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})

    print("test accuracy %g" % accuracy.eval(feed_dict={
        x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))
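The code feeds `keep_prob: 0.5` while training and `keep_prob: 1.0` while evaluating. The NumPy sketch below illustrates what that switch means, assuming the behaviour documented for `tf.nn.dropout` in TensorFlow 1.x: each element is kept with probability `keep_prob` and scaled by `1 / keep_prob`, otherwise set to zero. The function `dropout` here is a hypothetical stand-in for illustration, not TensorFlow's implementation.

```python
import numpy as np


def dropout(activations, keep_prob, rng):
    """Inverted dropout: keep each unit with probability keep_prob."""
    if keep_prob >= 1.0:
        # Evaluation mode: nothing is dropped and nothing is rescaled.
        return activations
    mask = rng.random(activations.shape) < keep_prob
    # Scaling the survivors by 1 / keep_prob keeps the expected sum unchanged.
    return activations * mask / keep_prob


rng = np.random.default_rng(0)
fc_1 = np.ones((4, 8), dtype=np.float32)  # stand-in for fc_1 activations

train_out = dropout(fc_1, 0.5, rng)  # entries are either 0.0 or 2.0
eval_out = dropout(fc_1, 1.0, rng)   # identical to the input
```

Because the scaling happens during training, the evaluation pass needs no correction factor, which is why passing `keep_prob: 1.0` is enough.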

http://ufldl.stanford.edu/wiki/index.php/Softmax%E5%9B%9E%E5%BD%92

https://nolanbconaway.github.io/blog/2017/softmax-numpy
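The two links above cover softmax in more depth. As a rough companion to the loss and accuracy lines in the training code, here is a minimal NumPy sketch of a numerically stable softmax and the cross-entropy computed from logits. It only mirrors the idea behind `tf.nn.softmax_cross_entropy_with_logits` and is not TensorFlow's implementation; the sample `logits` and `labels` are made-up values.

```python
import numpy as np


def softmax(logits):
    # Subtracting the row maximum avoids overflow in exp() without
    # changing the result.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def softmax_cross_entropy(labels, logits):
    # Compute log-softmax directly instead of log(softmax(x)) for stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -(labels * log_probs).sum(axis=1)


# Two made-up examples with three classes each; the second row would
# overflow a naive exp() implementation.
logits = np.array([[2.0, 1.0, 0.1], [1000.0, 1000.0, 1000.0]])
labels = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])

probs = softmax(logits)
loss = softmax_cross_entropy(labels, logits).mean()
accuracy = (probs.argmax(axis=1) == labels.argmax(axis=1)).mean()
```

Taking the mean over the batch corresponds to the `tf.reduce_mean` call in the training code, and the `argmax` comparison corresponds to `correct_prediction`.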
